The problem: I keep getting “connection refused” errors when trying to open the consoles of VMs. Why?

Background: My home lab runs Proxmox on three physical machines (moon1, moon2, and moon3), and each Proxmox host runs several VMs. To reach the Proxmox web UI, keepalived and haproxy provide a single IP address that load-balances and proxies TCP connections to the Proxmox UI at https://proxmox.mylocal.lan. But when I click Console for a particular VM in the Proxmox UI, I’m greeted with a connection refused error.

My hypothesis is that each HTTPS request into haproxy bounces between the three moon nodes, with no persistence to a single node across a session.

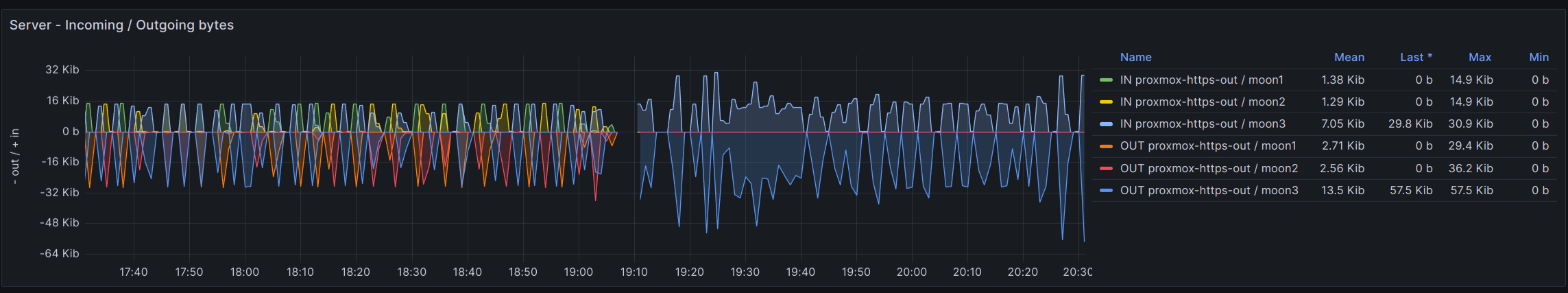

This Grafana graph showing the outbound connections for the haproxy backend confirms that.

On the left side of the graph, before 19:10, connections bounce between all three ‘OUT’ nodes, with no consistency in which backend receives each connection. I’m the only person accessing Proxmox at this time, so it’s only one client making requests.
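That pattern is consistent with haproxy’s default `roundrobin` algorithm, which applies when a backend doesn’t set `balance`: each new connection goes to the next server in turn, no matter which client opened it. Below is a minimal Python sketch of that behavior. It is a simplified model (equal weights, no health checks), and `round_robin` is a hypothetical helper, not haproxy code.

```python
from collections.abc import Iterator
from itertools import cycle

SERVERS = ["moon1", "moon2", "moon3"]


def round_robin(servers: list[str]) -> Iterator[str]:
    """Hand out backends in turn, ignoring who the client is.

    Simplified model of haproxy's roundrobin: equal weights, no health checks.
    """
    return cycle(servers)


if __name__ == "__main__":
    picker = round_robin(SERVERS)
    # Hypothetical: a single browser opening several TCP connections in a row.
    for conn_id in range(1, 5):
        print(f"connection {conn_id} -> {next(picker)}")
```

Four connections from the same browser land on moon1, moon2, moon3, then moon1 again, which matches the bouncing seen in the graph.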

To test whether the lack of persistence to a single backend was the cause, I looked through haproxy’s docs for a way to set some kind of persistence/affinity.

Enter the balance keyword.
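`balance source` tells haproxy to hash the client’s source IP address and use the result to choose a backend server, so the same client keeps landing on the same Proxmox node as long as the set of running servers doesn’t change. The snippet below is a minimal Python sketch of that idea, not haproxy’s actual implementation: `zlib.crc32` is an assumed stand-in for haproxy’s internal hash, `pick_server` is a hypothetical helper, and the client address is made up.

```python
import ipaddress
import zlib

# Backends as listed in the proxmox-https-out backend.
SERVERS = ["moon1", "moon2", "moon3"]


def pick_server(client_ip: str, servers: list[str]) -> str:
    """Choose a backend from the client's source IP (hypothetical helper).

    Assumption: CRC32 of the packed address stands in for haproxy's own
    hash; taking it modulo the number of servers mimics a map-based
    source-hash selection with equal weights.
    """
    packed = ipaddress.ip_address(client_ip).packed
    return servers[zlib.crc32(packed) % len(servers)]


if __name__ == "__main__":
    client = "192.168.1.50"  # hypothetical workstation address
    first = pick_server(client, SERVERS)
    # Every new TCP connection from the same IP resolves to the same backend,
    # which keeps the UI session and the console on one node.
    assert all(pick_server(client, SERVERS) == first for _ in range(10))
    print(f"{client} -> {first}")
```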

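The finished configuration:

```
frontend proxmox-https-in
    # TCP passthrough: TLS is terminated by Proxmox itself, not haproxy
    mode tcp
    # shared IP managed by keepalived
    bind 10.10.0.2:443
    default_backend proxmox-https-out

backend proxmox-https-out
    mode tcp
    # hash the client's source IP so each client sticks to one Proxmox node
    balance source
    # Proxmox web UI listens on 8006; "check" enables health checks
    server moon1 10.10.0.11:8006 check
    server moon2 10.10.0.12:8006 check
    server moon3 10.10.0.13:8006 check
```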

And voilà: in the traffic graph above, everything after 19:10 sticks to moon3, and console connections work!

I still need to test with something like an HTTP traffic generator to confirm this holds up with more clients, but for me, it works.
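One caveat for that test: because `balance source` keys on the client address, a traffic generator running on a single machine would send every connection from the same source IP, and therefore to the same backend. What needs to vary is the number of distinct client IPs. A rough offline sketch like the one below can show how a set of addresses would spread across the three nodes. CRC32 modulo the server count is an assumed stand-in for haproxy’s hash, `pick_server` and `distribution` are hypothetical helpers, and the 192.168.1.0/24 client subnet is made up.

```python
import ipaddress
import zlib
from collections import Counter

SERVERS = ["moon1", "moon2", "moon3"]


def pick_server(client_ip: str, servers: list[str]) -> str:
    """Hypothetical source-hash selection; CRC32 stands in for haproxy's hash."""
    packed = ipaddress.ip_address(client_ip).packed
    return servers[zlib.crc32(packed) % len(servers)]


def distribution(subnet: str, servers: list[str]) -> Counter:
    """Count how many host addresses in `subnet` map to each backend."""
    hosts = ipaddress.ip_network(subnet).hosts()
    return Counter(pick_server(str(ip), servers) for ip in hosts)


if __name__ == "__main__":
    counts = distribution("192.168.1.0/24", SERVERS)
    for server in SERVERS:
        print(f"{server}: {counts[server]} clients")
```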

What I still don’t understand:

I only added the ‘balance’ directive to the backend block, so why are all the connections inbound to haproxy now sticking to one host? Inbound host selection is handled by keepalived, which shares a VIP among the three haproxy hosts. But if that IP is sticky (keepalived uses a primary/secondaries configuration), why are the inbound connections to haproxy all sticking to one host now?

Summary:

I fixed my Proxmox console issues by making sessions stick to one Proxmox host behind haproxy. Is it perfect? No. Load could shift drastically when a host goes down. Does it cover 90% of my use cases? Yes. Good enough to declare victory and move on.
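The “load could shift drastically” caveat follows from how source hashing picks a server: the hash is applied against the set of *running* servers, and haproxy’s documentation for `balance source` warns that when the number of running servers changes, many clients get directed to a different server. The Python sketch below illustrates this under simple assumptions: CRC32 modulo the server count stands in for haproxy’s hash, `pick_server` and `remap_after_failure` are hypothetical helpers, the 192.168.1.0/24 clients are made up, and moon3 plays the failed host. It counts the clients that were on moon3 and the clients on healthy nodes that get moved anyway. In this plain-modulo model, roughly a third of all clients that were happily on moon1 or moon2 get reshuffled too.

```python
import ipaddress
import zlib

SERVERS = ["moon1", "moon2", "moon3"]


def pick_server(client_ip: str, servers: list[str]) -> str:
    """Hypothetical source-hash selection; CRC32 stands in for haproxy's hash."""
    packed = ipaddress.ip_address(client_ip).packed
    return servers[zlib.crc32(packed) % len(servers)]


def remap_after_failure(subnet: str, servers: list[str], failed: str) -> tuple[int, int]:
    """Return (clients that were on `failed`, clients moved off a healthy node)."""
    survivors = [s for s in servers if s != failed]
    on_failed = 0
    needlessly_moved = 0
    for ip in ipaddress.ip_network(subnet).hosts():
        before = pick_server(str(ip), servers)
        after = pick_server(str(ip), survivors)
        if before == failed:
            on_failed += 1  # these clients must move no matter what
        elif before != after:
            needlessly_moved += 1  # healthy node, but the modulus changed
    return on_failed, needlessly_moved


if __name__ == "__main__":
    lost, shuffled = remap_after_failure("192.168.1.0/24", SERVERS, failed="moon3")
    print(f"{lost} clients were on moon3; {shuffled} clients on moon1/moon2 moved anyway")
```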

Sources: